潘則佑

羅力勻

葉中瑋

李哲瑋

廖灝添

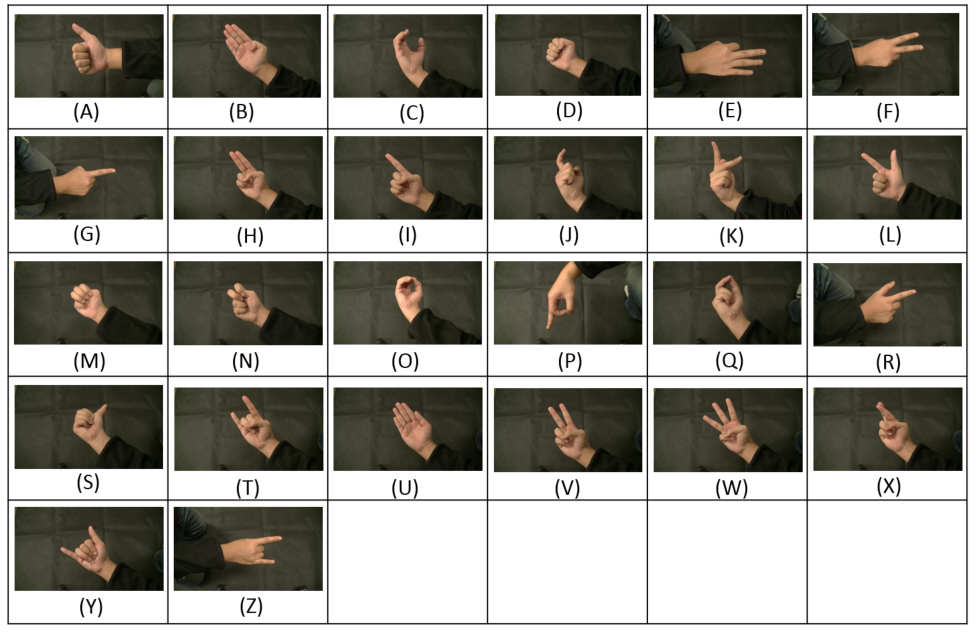

Cameras are embedded in many mobile and wearable devices and can be used for gesture recognition, or even sign language recognition, to help deaf people communicate with others. In this paper, we propose a vision-based gesture recognition system that can be used in environments with complex backgrounds. We design a method that adaptively updates the skin color model for different users and varying lighting conditions. Three kinds of features are combined to describe the contours and salient points of hand gestures. Principal Component Analysis (PCA), Linear Discriminant Analysis (LDA), and Support Vector Machine (SVM) are integrated to construct a novel hierarchical classification scheme. We evaluated the proposed recognition method on two datasets: (1) the CSL dataset, which we collected ourselves and in which images were captured against complex backgrounds, and (2) the public ASL dataset, in which images of the same gesture were captured under different lighting conditions. Our method achieves accuracies of 99.8% and 94%, respectively, outperforming existing work.
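The abstract does not spell out how the skin color model is adapted, so the following is only an illustrative sketch of one common approach, not the authors' method: a per-channel Gaussian over (Cb, Cr) chroma values whose mean and variance are blended with newly observed skin pixels by an exponential moving average. The class name `AdaptiveSkinModel`, the parameters `rate`, `k`, and `min_var`, and the choice of chroma space are all assumptions made for illustration.

```python
class AdaptiveSkinModel:
    """Hypothetical per-channel Gaussian over (Cb, Cr) chroma values.

    Illustrative only: the paper's actual update rule is not given
    in the abstract.
    """

    def __init__(self, mean, var, min_var=4.0):
        self.mean = list(mean)
        self.var = list(var)
        self.min_var = min_var  # floor so the model never collapses to a point

    def is_skin(self, pixel, k=2.5):
        # Accept a pixel when every channel lies within k standard deviations.
        return all((v - m) ** 2 <= k * k * s
                   for v, m, s in zip(pixel, self.mean, self.var))

    def update(self, samples, rate=0.1):
        # Blend statistics of freshly observed skin pixels into the model
        # using an exponential moving average with weight `rate`.
        n = len(samples)
        if n == 0:
            return
        for c in range(len(self.mean)):
            values = [p[c] for p in samples]
            s_mean = sum(values) / n
            s_var = sum((v - s_mean) ** 2 for v in values) / n
            self.mean[c] = (1 - rate) * self.mean[c] + rate * s_mean
            self.var[c] = max(self.min_var,
                              (1 - rate) * self.var[c] + rate * s_var)
```

In such a scheme, `update` would be called with pixels from a region already trusted to be skin (for example, a detected hand or face), so that the acceptance region in `is_skin` drifts toward the current user and lighting. A small `rate` makes the model stable but slow to adapt; a large one adapts quickly but is more sensitive to mislabeled pixels.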

@INPROCEEDINGS{7544998, author={T. Y. Pan and L. Y. Lo and C. W. Yeh and J. W. Li and H. T. Liu and M. C. Hu}, booktitle={2016 IEEE Second International Conference on Multimedia Big Data (BigMM)}, title={Real-Time Sign Language Recognition in Complex Background Scene Based on a Hierarchical Clustering Classification Method}, year={2016}, pages={64-67}, month={April},}
