吳敏慈

潘則佑

蔡菀倫

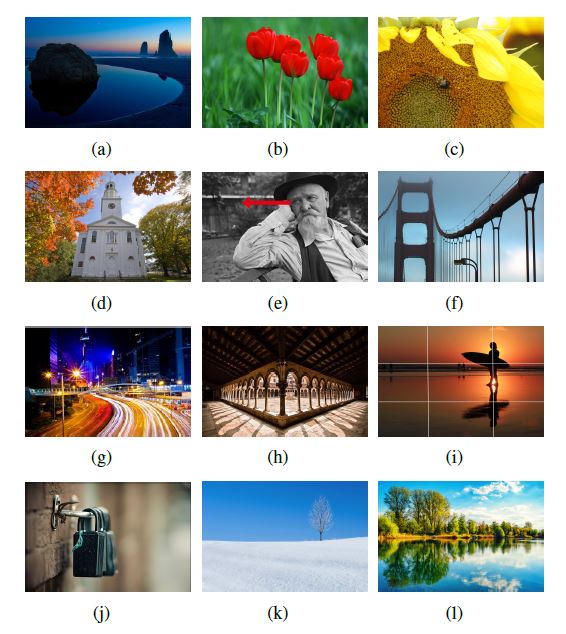

Learning basic composition rules is a fundamental step for beginners who want to take better photos. However, no existing tool helps beginners analyze which composition rules a given photo follows. In this study, we developed a system that identifies 12 common composition rules in a photo, some of which have not been considered in previous studies. In particular, we used two deep neural networks (DNNs), AlexNet and GoogLeNet, to extract high-level semantic features that support further analysis of photo composition rules. To train the DNN models, we constructed a dataset of photos collected from DPChallenge, Flickr, and Unsplash. Each collected photo was then labelled with the 12 composition rules by raters recruited from Amazon Mechanical Turk (AMT). Our system also visualizes the representative features of each composition rule. The results demonstrate the feasibility of the proposed system and suggest that it could help users improve their photographic skills.
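The abstract says each photo was labelled with 12 composition rules by multiple AMT raters, but it does not say how the individual ratings were combined. As a minimal sketch under that caveat, the snippet below assumes a simple majority vote that turns per-rater selections into one multi-hot target vector per photo. The function name `aggregate_labels`, the `min_agreement` threshold, and the use of bare rule indices are all hypothetical and not taken from the paper.

```python
from collections import Counter

NUM_RULES = 12  # the abstract states 12 composition rules; their names are not given there


def aggregate_labels(rater_votes, min_agreement=0.5):
    """Combine per-rater rule selections into one multi-hot vector.

    rater_votes: list of sets, one per rater, holding the rule indices
        (0..NUM_RULES-1) that the rater assigned to the photo.
    min_agreement: assumed threshold; a rule is kept only when strictly
        more than this fraction of raters selected it.
    """
    if not rater_votes:
        raise ValueError("need at least one rater")
    # Count how many raters selected each rule index.
    counts = Counter(idx for votes in rater_votes for idx in set(votes))
    n = len(rater_votes)
    return [1 if counts[i] / n > min_agreement else 0 for i in range(NUM_RULES)]


# Three hypothetical raters labelling one photo.
target = aggregate_labels([{0, 3}, {0}, {3, 5}])
print(target)
```

A multi-hot vector like this could serve as the training target for a multi-label classifier, since a single photo may satisfy several composition rules at once.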

@INPROCEEDINGS{8026247, author={Min-Tzu Wu and Tse-Yu Pan and Wan-Lun Tsai and Hsu-Chan Kuo and Min-Chun Hu}, booktitle={2017 IEEE International Conference on Multimedia Expo Workshops (ICMEW)}, title={High-level Semantic Photographic Composition Analysis and Understanding with Deep Neural Networks}, year={2017}, pages={279-284}, month={July},}
