景璞

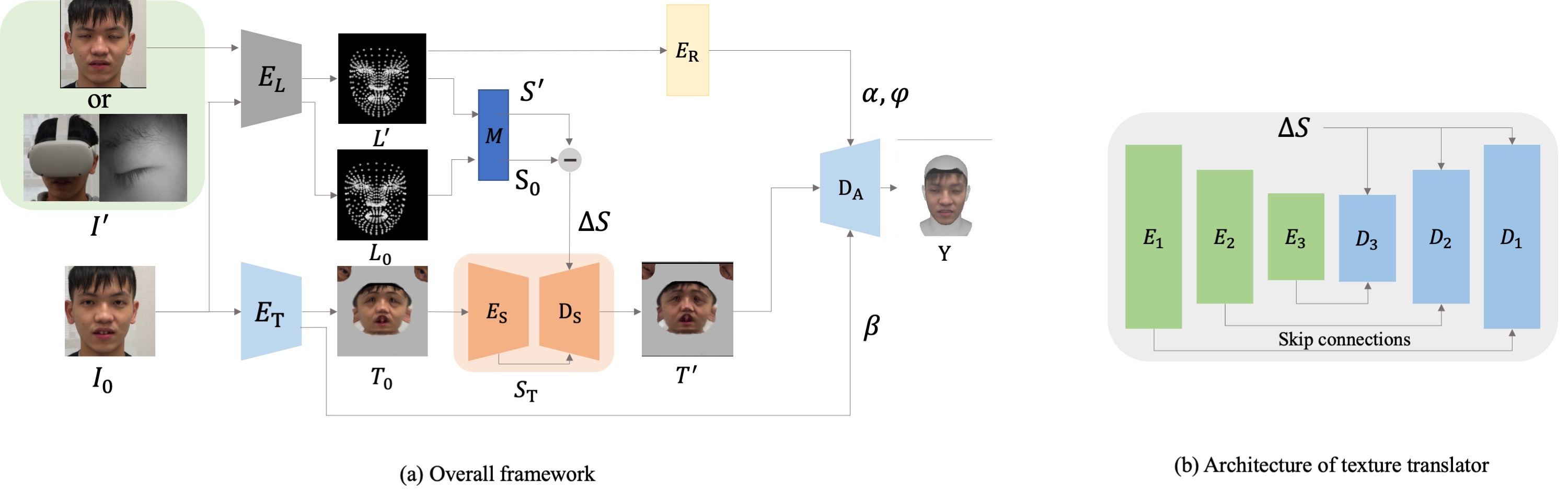

We propose a 2D landmark-driven 3D facial animation framework that is trained without requiring a 3D facial dataset. Our method decomposes the 3D facial avatar into geometry and texture. Given 2D landmarks as input, our models learn to estimate FLAME parameters and to transfer the target texture across different facial expressions. Our experiments show that the method achieves remarkable results. Because it takes only 2D landmarks as input, our method has the potential to be deployed in scenarios where full RGB facial images are difficult to obtain (e.g., when the face is occluded by a VR head-mounted display).
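To make the geometry branch more concrete, here is a minimal sketch of the kind of mapping the abstract describes: 2D landmarks in, FLAME-style expression and pose coefficients out. It is not the network from the paper. The 68-point landmark layout, the coefficient counts, the two-layer perceptron, and all function names are assumptions chosen for illustration, and the weights are random rather than trained.

```python
import math
import random

NUM_LANDMARKS = 68   # assumption: 68-point 2D landmark layout
NUM_EXPRESSION = 50  # assumption: number of expression coefficients predicted
NUM_POSE = 6         # assumption: global rotation (3) + jaw rotation (3)


def normalize_landmarks(landmarks):
    """Center the landmarks and scale them to unit RMS distance from the centroid."""
    n = len(landmarks)
    cx = sum(x for x, _ in landmarks) / n
    cy = sum(y for _, y in landmarks) / n
    centered = [(x - cx, y - cy) for x, y in landmarks]
    rms = math.sqrt(sum(x * x + y * y for x, y in centered) / n)
    scale = max(rms, 1e-8)  # guard against degenerate (all-equal) input
    return [(x / scale, y / scale) for x, y in centered]


def make_linear(in_dim, out_dim, rng):
    """Create a randomly initialized (untrained) linear layer."""
    weights = [[rng.gauss(0.0, 0.01) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weights, bias


def linear(layer, x):
    """Apply a linear layer: weights @ x + bias."""
    weights, bias = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def predict_flame_params(landmarks, hidden_layer, output_layer):
    """Hypothetical regressor: landmarks -> (expression, pose) coefficient lists."""
    flat = [c for point in normalize_landmarks(landmarks) for c in point]
    hidden = [max(h, 0.0) for h in linear(hidden_layer, flat)]  # ReLU
    out = linear(output_layer, hidden)
    return out[:NUM_EXPRESSION], out[NUM_EXPRESSION:]


rng = random.Random(0)
hidden_layer = make_linear(NUM_LANDMARKS * 2, 64, rng)
output_layer = make_linear(64, NUM_EXPRESSION + NUM_POSE, rng)

# Synthetic landmarks standing in for the output of a 2D landmark detector.
landmarks = [(rng.uniform(0, 256), rng.uniform(0, 256)) for _ in range(NUM_LANDMARKS)]
expression, pose = predict_flame_params(landmarks, hidden_layer, output_layer)
```

In a full pipeline, the predicted coefficients would be passed to a FLAME model implementation to produce mesh vertices, and a separate texture model would handle appearance. Both are outside the scope of this sketch.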

@inproceedings{ching2023sofa,

title={SOFA: Style-based One-shot 3D Facial Animation Driven by 2D landmarks},

author={Ching, Pu and Chu, Hung-Kuo and Hu, Min-Chun},

booktitle={Proceedings of the 2023 ACM International Conference on Multimedia Retrieval},

pages={545--549},

year={2023}

}