景璞

陳文正

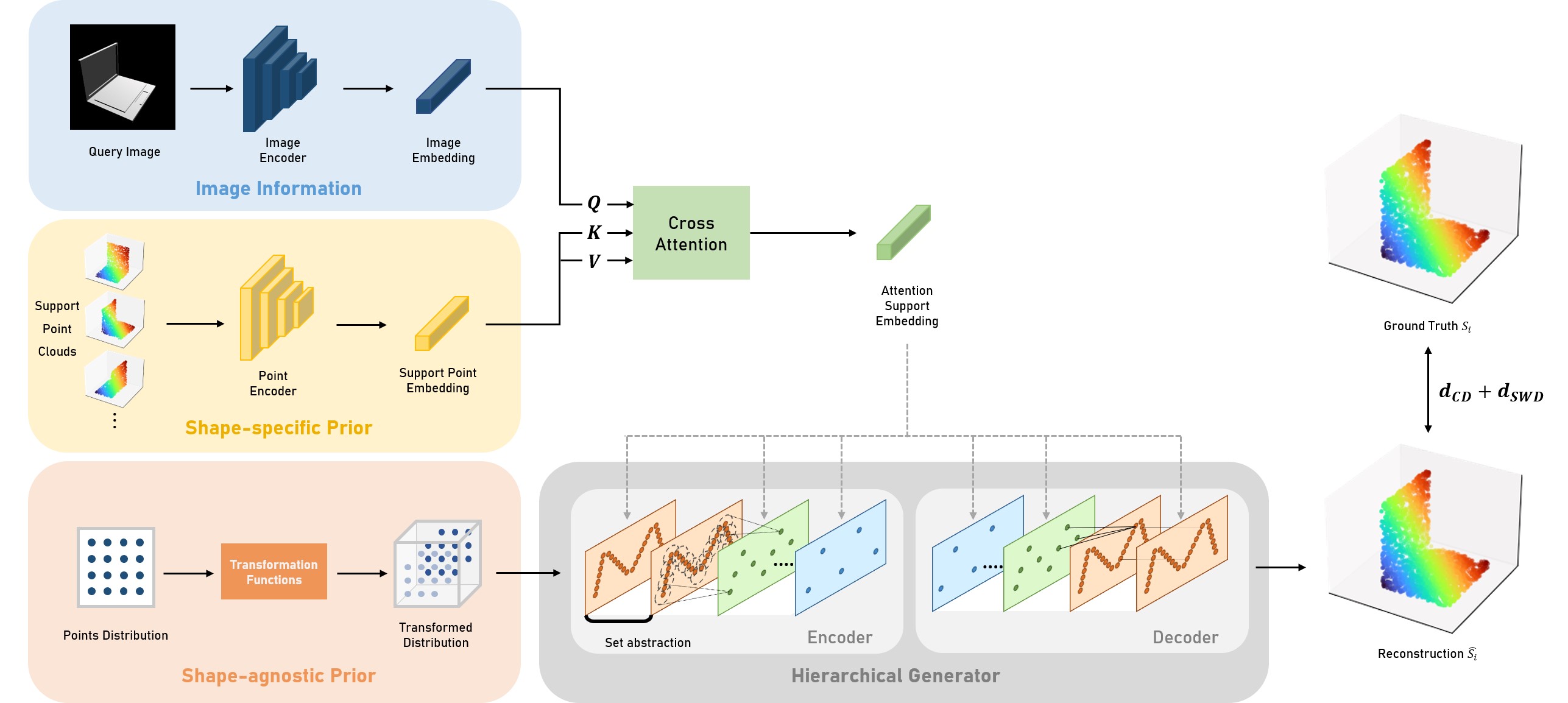

Point clouds are a common representation of 3D structures. Beyond common applications such as autonomous driving and robotic navigation, synthesizing point clouds from a single image has shown potential as a simple but efficient solution for 3D modeling. However, most deep learning models for generating point clouds suffer from the domain gap between the training and testing sets, which makes them impractical for 3D content creation. Few-shot methods have been proposed that use 3D prototypes to bridge the domain gap. Despite the promising results of the state-of-the-art approach, some issues, such as mode collapse and local sparseness, remain unsolved. In this work, we propose an Instance-aware Feature Fusion module with an attention mechanism to improve reconstruction quality in the few-shot scenario. Furthermore, we introduce a two-stage Hierarchical Point Cloud Generator and use the Sliced Wasserstein Distance (SWD) as an extra training objective to address the local-sparsity problem. Experimental results show that our method outperforms the existing state-of-the-art method on the ModelNet and ShapeNet datasets.
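For readers unfamiliar with the Sliced Wasserstein Distance mentioned above, the following is a minimal NumPy sketch of the generic Monte Carlo estimator between two point clouds of equal size. It is not the training loss used in I3FNET: the function name `sliced_wasserstein_distance`, the `n_projections` and `seed` parameters, and the choice of the 2-Wasserstein form are assumptions made purely for illustration.

```python
import numpy as np


def sliced_wasserstein_distance(x, y, n_projections=64, seed=0):
    """Monte Carlo estimate of the sliced 2-Wasserstein distance.

    x, y: arrays of shape (N, D) holding two point clouds with the same
    number of points. All names and defaults here are illustrative
    assumptions, not taken from the paper.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    if x.shape != y.shape:
        raise ValueError("this sketch assumes point clouds of equal shape")

    rng = np.random.default_rng(seed)
    # Draw random directions and normalize them onto the unit sphere.
    directions = rng.normal(size=(n_projections, x.shape[1]))
    directions /= np.linalg.norm(directions, axis=1, keepdims=True)

    # Project both clouds onto every direction: result shape (N, P).
    proj_x = x @ directions.T
    proj_y = y @ directions.T

    # In 1D, optimal transport between equal-size sets matches sorted values.
    proj_x = np.sort(proj_x, axis=0)
    proj_y = np.sort(proj_y, axis=0)

    # Average the squared 1D transport cost over points and projections.
    return float(np.sqrt(np.mean((proj_x - proj_y) ** 2)))
```

Because each projection compares sorted one-dimensional values, the estimate does not depend on the order of the points in either cloud. A training setup would normally use a differentiable framework rather than plain NumPy.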

@inproceedings{ching2024i3fnet,
  title={I3FNET: Instance-Aware Feature Fusion for Few-Shot Point Cloud Generation from Single Image},
  author={Ching, Pu and Chen, Wen-Cheng and Hu, Min-Chun},
  booktitle={2024 IEEE International Conference on Multimedia and Expo Workshops (ICMEW)},
  pages={1--6},
  year={2024},
  organization={IEEE}
}