蔡岱臻

潘則佑

In recent years, advances in data transmission have made real-time streaming services possible. These services keep us bound to digital information at all times and contribute to a fast-paced society. In such a life, information is broken into fragments, and the stimulation that users receive intensifies. Moreover, the monitoring tools behind media interfaces extensively sample users' behavioral and physiological data. Through a repeated cycle of stimulating, sampling, analyzing, and concluding, the system searches for the most effective way to manage the user's attention and affective state. In this scenario, can users still command their own attention and affective state and maintain their subjectivity? Or will they become generators of stimulus-response data under long-term suppression by the system?

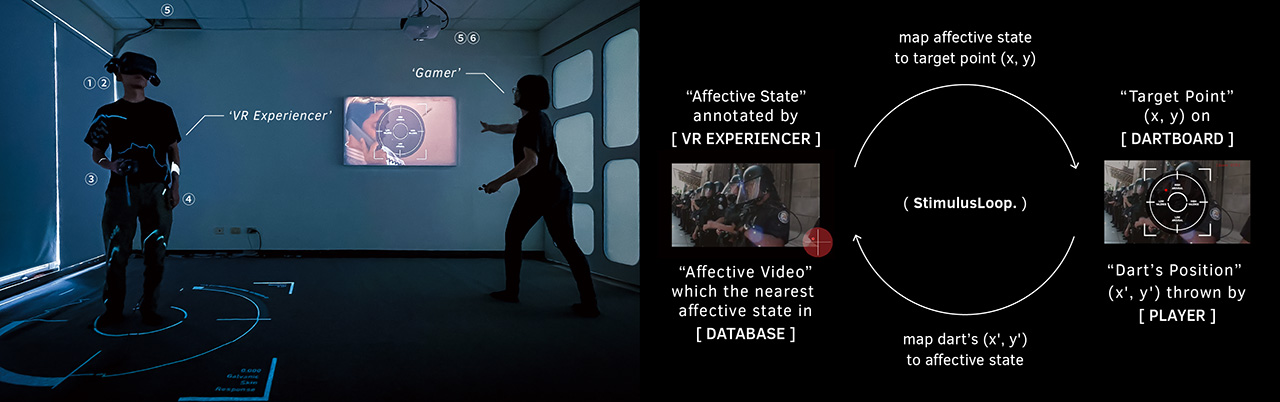

Following these questions, this research takes the relationship between the system and the user as its interactive framework. It is a technology art creation that involves quantitative experiments. The experiment is designed to explore which video formats can evoke the user's affective state in a virtual reality environment. In addition, to prompt the audience to think about the nature of the problem, the experimental data serves as a reference for the interactive system, so that the system oscillates between fiction and experiment. In terms of display, the game-actuated mechanism reveals to participants the relation between the system and the user, and each participant's behavior data and affective state are visualized. The work aims to expose the system and to open the possibility of acknowledging and reflecting on the technology.

@inproceedings{tsai2022stimulusloop,
  title={StimulusLoop: Game-Actuated Mutuality Artwork for Evoking Affective State},
  author={Tsai, Tai-Chen and Pan, Tse-Yu and Hu, Min-Chun and Tao, Ya-Lun},
  booktitle={Proceedings of the 30th ACM International Conference on Multimedia},
  pages={7245--7247},
  year={2022}
}